Media streaming is the continuous retrieval and processing of multimedia data from a source. It is an alternative to file downloading: with a download, the end user must obtain the whole file before interacting with it, whereas with streaming the user can start playing the media almost immediately, without needing the entire file. Live video, as well as recorded audio and video, can be streamed this way. Several protocols are available for media streaming, such as RTSP and WebRTC.

Real-time Streaming Protocol (RTSP)

Real-time Streaming Protocol (RTSP) is an application-layer network protocol that allows real-time streaming. RTSP is used for operations on the stream, such as initiating and terminating it. It doesn’t transmit the media data on its own; rather, it leverages the RTP and RTCP protocols for this purpose.

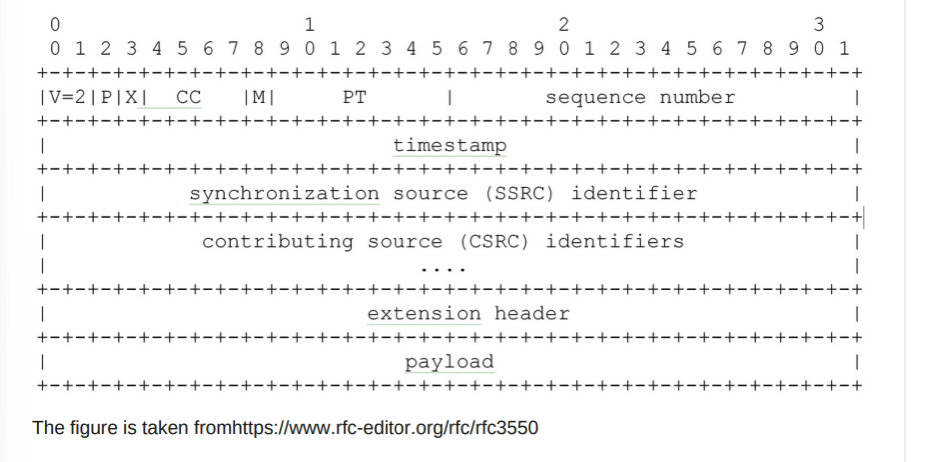

RTP (Real-time Transport Protocol) packets are made up of a header and a payload. The payload contains the actual data to be streamed. The header carries fields such as the timestamp and the sequence number, which describe the payload. The timestamp indicates when the data in the packet was sampled. However, this timestamp is not absolute but relative: it starts from an arbitrary offset, so only the difference between the timestamps of consecutive frames is meaningful. When a single frame doesn’t fit in one RTP packet, it is transferred in multiple RTP packets, and all packets belonging to the same frame carry the same timestamp. The RTP header has the following format:
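The fixed part of the RTP header is 12 bytes long. As a rough sketch of that layout, the snippet below unpacks it from raw bytes with Python's `struct` module. The names `RtpHeader` and `parse_rtp_header` and the sample packet are hypothetical and only serve as illustration; optional CSRC entries and header extensions are ignored.

```python
import struct
from typing import NamedTuple


class RtpHeader(NamedTuple):
    """Fields of the 12-byte fixed RTP header (hypothetical helper type)."""
    version: int
    padding: bool
    extension: bool
    csrc_count: int
    marker: bool
    payload_type: int
    sequence_number: int
    timestamp: int
    ssrc: int


def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Unpack the fixed RTP header; CSRC list and extensions are not parsed."""
    if len(packet) < 12:
        raise ValueError("RTP packet is shorter than the 12-byte fixed header")
    # Network byte order: 2 flag bytes, 16-bit sequence, 32-bit timestamp, 32-bit SSRC.
    b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    return RtpHeader(
        version=b0 >> 6,
        padding=bool(b0 & 0x20),
        extension=bool(b0 & 0x10),
        csrc_count=b0 & 0x0F,
        marker=bool(b1 & 0x80),
        payload_type=b1 & 0x7F,
        sequence_number=seq,
        timestamp=ts,
        ssrc=ssrc,
    )


# Made-up packet: version 2, marker bit set, dynamic payload type 96.
sample_packet = struct.pack("!BBHII", 0x80, 0x80 | 96, 4711, 2847690, 1644170567) + b"payload"
header = parse_rtp_header(sample_packet)
print(header.sequence_number, header.timestamp)
```

In a GStreamer application this parsing is normally done by the library; the sketch is only meant to show where the timestamp and sequence number sit inside the packet.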

RTCP (Real-time Transport Control Protocol) packets are sent at uneven intervals alongside RTP packets and are used to monitor the status of the data transfer. Several packet types are defined in RTCP. The main ones are the sender report (SR) and the receiver report (RR), which carry transmission and reception statistics. Sender reports contain an NTP (Network Time Protocol) time and an RTP time. The NTP time is the absolute (wallclock) time of the RTSP server (or live camera), whereas the RTP time is the reference RTP timestamp that corresponds to the same instant.

The RTCP sender report has the following format:
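The sender information block of an SR can be sketched in a few lines of Python: a 4-byte common header (packet type 200) is followed by the SSRC, the 64-bit NTP timestamp, the RTP timestamp, and the sender's packet and octet counts. The names `SenderReport` and `parse_sender_report` are hypothetical, and any report blocks that may follow are ignored.

```python
import struct
from typing import NamedTuple

RTCP_SR = 200  # RTCP packet type of a sender report


class SenderReport(NamedTuple):
    """Sender info of an RTCP SR (hypothetical helper type)."""
    ssrc: int
    ntptime: int       # 64-bit NTP timestamp: upper 32 bits seconds, lower 32 bits fraction
    rtptime: int       # RTP timestamp that corresponds to the same instant as ntptime
    packet_count: int
    octet_count: int


def parse_sender_report(packet: bytes) -> SenderReport:
    """Unpack header and sender info; trailing report blocks are not parsed."""
    if len(packet) < 28:
        raise ValueError("RTCP SR is shorter than 28 bytes")
    b0, packet_type, _length = struct.unpack("!BBH", packet[:4])
    if b0 >> 6 != 2 or packet_type != RTCP_SR:
        raise ValueError("not an RTCP sender report")
    ssrc, ntp_msw, ntp_lsw, rtptime, packets, octets = struct.unpack("!6I", packet[4:28])
    return SenderReport(ssrc, (ntp_msw << 32) | ntp_lsw, rtptime, packets, octets)


# Packet built by hand for illustration only.
ntp = 16645962074316441600
sample_sr = struct.pack("!BBH6I", 0x80, RTCP_SR, 6, 1644170567,
                        ntp >> 32, ntp & 0xFFFFFFFF, 2847690, 475, 623890)
report = parse_sender_report(sample_sr)
print(report)
```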

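The absolute time of a frame can be calculated from these three time values (the RTP time of the frame, plus the NTP time and the reference RTP time from the sender report) and the clock rate of the RTSP server as follows: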

FRAME_TS = LAST_NTP + (RTP - LAST_RTP) / 90000

Where:

- RTP is the frame timestamp received via the RTP header

- LAST_NTP is the NTP clock time received via the RTCP Sender Report

- LAST_RTP is the reference RTP time received via the RTCP Sender Report

- 90000 is the clock rate, because the RTP timestamps of H.264 video increment at 90 kHz, as defined by the RTP payload format specification.

That means the RTP timestamp increments by 90000 every second. Streams with a different clock rate (audio, for example) need the corresponding value instead.
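As a minimal sketch, the formula can be written in Python as follows. The function names are hypothetical. Two details are assumptions that go beyond the text above: the 64-bit NTP value from a sender report is first converted to Unix seconds, and the RTP difference is treated as a signed 32-bit value so that a counter wraparound does not break the result.

```python
NTP_UNIX_OFFSET = 2_208_988_800  # seconds between 1900-01-01 (NTP epoch) and 1970-01-01 (Unix epoch)


def ntp_to_unix_seconds(ntptime: int) -> float:
    """Convert a 64-bit NTP timestamp (32.32 fixed point) to Unix seconds."""
    seconds = ntptime >> 32
    fraction = ntptime & 0xFFFFFFFF
    return seconds - NTP_UNIX_OFFSET + fraction / 2**32


def frame_timestamp(rtp: int, last_ntp: float, last_rtp: int, clock_rate: int = 90000) -> float:
    """FRAME_TS = LAST_NTP + (RTP - LAST_RTP) / clock_rate, with last_ntp in seconds."""
    # RTP timestamps are 32-bit counters; interpret the difference as signed
    # so that frames slightly before or after a wraparound still work.
    diff = (rtp - last_rtp) & 0xFFFFFFFF
    if diff >= 0x80000000:
        diff -= 0x100000000
    return last_ntp + diff / clock_rate


# A frame sampled 45000 ticks (half a second at 90 kHz) after the sender report.
last_ntp = ntp_to_unix_seconds((NTP_UNIX_OFFSET + 100) << 32)
print(frame_timestamp(rtp=46000, last_ntp=last_ntp, last_rtp=1000))
```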

GStreamer

GStreamer is a powerful framework for stream processing. It works with pipelines, which consist of elements that interact with each other through buffers and events.

Elements are simple building blocks, each of which is a black box. Every decoder, encoder, demuxer, and video or audio output inside the pipeline is an element. A decoder element, for instance, takes encoded data as input and produces decoded data as output. While the actual media data is contained in buffers, control information such as seeking information and end-of-stream notifications is contained in events. Every element uses pads with various capabilities to communicate with the outside. There are two kinds of pads: sinks and sources. Sink pads can be considered the input of the element and source pads its output.

With a structure called a bin, related elements can be brought together into a single logical element. Bins are elements themselves and therefore have all the properties of an element.

GStreamer can be run from the command-line interface, and it also provides an API. Below is a sample GStreamer pipeline run from the command line. In this pipeline, video is captured from v4l2 devices, such as webcams and TV cards, with the v4l2src element. The received images are decoded with jpegdec and rendered to a drawable on a local display with xvimagesink.
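The same chain of elements can also be started through the Python bindings. The sketch below assumes that PyGObject and GStreamer 1.x are installed and that the camera delivers JPEG images; the function name `run_pipeline` is hypothetical. The imports sit inside the function so that the module can be loaded without GStreamer.

```python
# Command-line equivalent: gst-launch-1.0 v4l2src ! jpegdec ! xvimagesink
PIPELINE_DESCRIPTION = "v4l2src ! jpegdec ! xvimagesink"


def run_pipeline(description: str = PIPELINE_DESCRIPTION) -> None:
    """Build the pipeline from its textual description and play it until EOS or error."""
    # Imported lazily: requires PyGObject with the GStreamer 1.0 typelibs.
    import gi
    gi.require_version("Gst", "1.0")
    from gi.repository import Gst

    Gst.init(None)
    pipeline = Gst.parse_launch(description)
    pipeline.set_state(Gst.State.PLAYING)
    bus = pipeline.get_bus()
    try:
        # Block until the stream ends or an error is posted on the bus.
        bus.timed_pop_filtered(
            Gst.CLOCK_TIME_NONE,
            Gst.MessageType.ERROR | Gst.MessageType.EOS,
        )
    finally:
        pipeline.set_state(Gst.State.NULL)
```

Calling `run_pipeline()` on a machine with a webcam and an X display should open a window showing the camera image.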

Timestamp Calculation With GStreamer

The power of GStreamer comes with some usage difficulties. For instance, if you want to try something custom like calculating the absolute timestamp of the frames in the stream, this can be a bit tiring because of the many unknowns, such as:

- which element provides the RTCP packets

- which element provides the RTP packet headers

- whether this information is accessed through the element’s buffers or events

- whether the time information obtained is the required RTP time or a time that GStreamer uses for its own operation.

As mentioned above, the NTP timestamp and the reference RTP time in RTCP packets are needed to calculate the absolute timestamp of a frame. In GStreamer, RTCP packets can be accessed through the rtpbin element, so rtpbin must be included in the pipeline. The rtpbin element combines the functions of several elements into a single element; it supports multiple RTP sessions, which are synchronized using RTCP SR packets.

The other requirement for calculating the absolute timestamp of frames is the time values in the RTP headers. In GStreamer, these values can be reached by adding a probe to the pads of elements in the pipeline. Once these three time values are obtained and the clock rate of the server is known, the absolute timestamp can be calculated for each frame. In the next section, a sample GStreamer pipeline and the frame timestamp calculation steps are shown.

Sample Code

The pipeline below consists of the rtspsrc, rtph264depay and appsink elements (note that the pipeline is simplified for better understanding). rtspsrc connects to an RTSP server and reads the data, rtph264depay extracts H264 video from the RTP packets, and appsink lets the application get a handle on the GStreamer data. The rtpbin element is not included in this pipeline explicitly, but rtspsrc creates it internally as its manager.

First of all, we need to connect to the signal emitted when the rtpbin element is created so that we can access it. To do this, the rtspsrc element is taken from the pipeline as in line 5. This element emits a “new-manager” signal after creating the manager. A callback function that receives the rtpbin element is connected to this signal as in line 6. We will use the session information that we obtain in this function to access the RTCP SR.

Then we need to access the time values in the RTP packets. For this purpose, we can add a probe to the sink pad of the rtph264depay element. The rtph264depay element is taken as in line 8 and its sink pad as in line 9. We can then attach our function as a probe on this pad as in line 10.

After the above setup, when the rtpbin element is created, it is passed to our callback function as the manager parameter. Here we can capture incoming RTCP packets using sessions. To reach the session, a pad of that session should be requested as in line 14. Then, as in line 15, the “get-internal-session” action signal should be emitted to obtain the internal session. We should connect to the “on-receiving-rtcp” signal of this session with a new callback function. Thus, the callback function will be called every time a new RTCP packet arrives.
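The wiring described so far could look roughly like the sketch below. It is not the original listing, so its line numbers do not match the ones mentioned in the text. The element names `src`, `depay` and `sink`, the function names, and the two callback parameters are assumptions chosen for illustration, and `request_pad_simple` assumes GStreamer 1.20 or newer (older versions offer `get_request_pad`).

```python
def rtsp_pipeline_description(location: str) -> str:
    """Textual description of the simplified pipeline; element names are hypothetical."""
    return (
        f"rtspsrc name=src location={location} ! "
        "rtph264depay name=depay ! appsink name=sink"
    )


def attach_callbacks(pipeline, on_receiving_rtcp, on_rtp_buffer) -> None:
    """Hook an RTCP callback into rtspsrc's manager and an RTP probe onto the depayloader."""
    # Imported lazily: requires PyGObject with the GStreamer 1.0 typelibs.
    import gi
    gi.require_version("Gst", "1.0")
    from gi.repository import Gst

    def on_new_manager(rtspsrc, manager):
        # manager is the rtpbin created internally by rtspsrc.
        manager.request_pad_simple("recv_rtcp_sink_0")
        session = manager.emit("get-internal-session", 0)
        session.connect_after("on-receiving-rtcp", on_receiving_rtcp)

    # rtspsrc emits "new-manager" once it has created its rtpbin.
    pipeline.get_by_name("src").connect("new-manager", on_new_manager)

    # Buffers entering rtph264depay are still RTP packets, so probe its sink pad.
    sink_pad = pipeline.get_by_name("depay").get_static_pad("sink")
    sink_pad.add_probe(Gst.PadProbeType.BUFFER, on_rtp_buffer)
```

A pipeline for this function can be created with `Gst.parse_launch(rtsp_pipeline_description(url))` after `Gst.init(None)` has been called.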

A buffer of type Gst.Buffer is passed to the function. This buffer must be mapped to an RTCP buffer as in line 21 so that the RTCP packets can be accessed. RTCP carries packet types other than the sender report, so the sender reports have to be picked out of the incoming packets. We can get the first packet from the RTCP buffer with get_first_packet as in line 23. Subsequent packets can be accessed as in line 30. As in line 27, the sender report itself can be read from the packet. A sample Sender Report arriving in the GStreamer buffer is “(ssrc=1644170567, ntptime=16645962074316441600, rtptime=2847690, packet_count=475, octet_count=623890)”. The NTP and RTP time values from this report should be saved for later use.
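A callback along these lines might be sketched as follows. Again, this is not the original listing. The module-level dictionary `last_sender_report` and the helper `remember_sender_report` are hypothetical ways of saving the values, and the GstRtp calls are used as I understand the Python bindings, so they are worth checking against the GStreamer version in use.

```python
# Most recent sender report values, filled in by the RTCP callback (hypothetical storage).
last_sender_report = {"ntptime": None, "rtptime": None}


def remember_sender_report(ntptime: int, rtptime: int) -> None:
    """Save the NTP time and reference RTP time of the latest SR for later use."""
    last_sender_report["ntptime"] = ntptime
    last_sender_report["rtptime"] = rtptime


def on_receiving_rtcp(session, buffer) -> None:
    """Signal handler for "on-receiving-rtcp": look for sender reports in the buffer."""
    # Imported lazily: requires PyGObject with the Gst and GstRtp 1.0 typelibs.
    import gi
    gi.require_version("Gst", "1.0")
    gi.require_version("GstRtp", "1.0")
    from gi.repository import Gst, GstRtp

    rtcp_buffer = GstRtp.RTCPBuffer()
    if not GstRtp.RTCPBuffer.map(buffer, Gst.MapFlags.READ, rtcp_buffer):
        return
    try:
        packet = GstRtp.RTCPPacket()
        has_packet = rtcp_buffer.get_first_packet(packet)
        while has_packet:
            # A compound RTCP buffer can hold several packet types; keep only SRs.
            if packet.get_type() == GstRtp.RTCPType.SR:
                info = packet.sr_get_sender_info()
                remember_sender_report(info.ntptime, info.rtptime)
            has_packet = packet.move_to_next()
    finally:
        rtcp_buffer.unmap()
```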

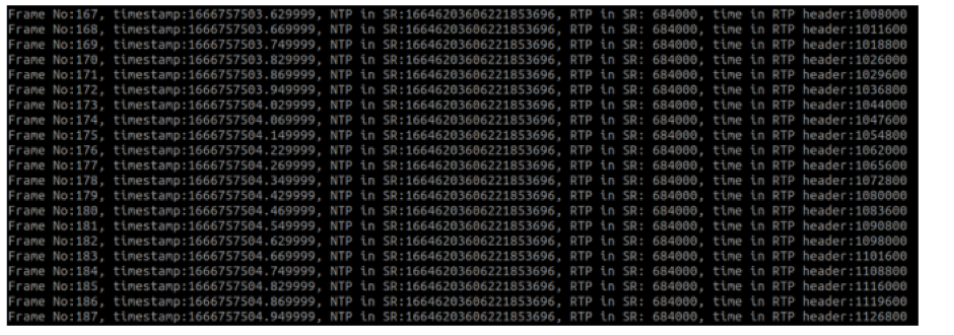

After obtaining the NTP and RTP reference times from the RTCP Sender Report, the next step is to get the time value in the RTP packets. For this, the “calculate_timestamp” function that we added as a probe to the sink pad of the rtph264depay element can be used. The Gst.Buffer can be accessed through the info object that is passed as a parameter to the function. This buffer should be mapped as in the on_receiving_rtcp_callback function. However, this time it is necessary to map it to an RTPBuffer instead of an RTCPBuffer, as in line 35. With the resulting RTP buffer, the time value is read with “RTPBuffer.get_timestamp()” as in line 39. As mentioned before, the same timestamp value can be seen several times depending on frame size, since frames that do not fit in a single packet are split into multiple packets. Using the last NTP and RTP times from the SR, the RTP time from the RTP header and the clock rate, the absolute time of each frame can be calculated with the formula stated above, as in lines 39–40.
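A probe of this kind could be sketched as below. It is not the original listing. The dictionary `last_sender_report` stands in for whatever storage the RTCP callback fills, `absolute_frame_time` is a hypothetical helper, and the clock rate of 90000 as well as the conversion of the 64-bit NTP value to Unix seconds are assumptions.

```python
CLOCK_RATE = 90000               # assumed clock rate of the H.264 stream
NTP_UNIX_OFFSET = 2_208_988_800  # seconds between the NTP epoch (1900) and the Unix epoch (1970)

# Assumed to be filled by the RTCP callback whenever a sender report arrives.
last_sender_report = {"ntptime": None, "rtptime": None}


def absolute_frame_time(rtp_time: int):
    """Apply FRAME_TS = LAST_NTP + (RTP - LAST_RTP) / CLOCK_RATE; None before the first SR."""
    ntptime = last_sender_report["ntptime"]
    if ntptime is None:
        return None
    # 64-bit NTP value: upper 32 bits are seconds, lower 32 bits the fraction.
    last_ntp = (ntptime >> 32) - NTP_UNIX_OFFSET + (ntptime & 0xFFFFFFFF) / 2**32
    return last_ntp + (rtp_time - last_sender_report["rtptime"]) / CLOCK_RATE


def calculate_timestamp(pad, info):
    """Pad probe on the rtph264depay sink pad: read the RTP timestamp of each packet."""
    # Imported lazily: requires PyGObject with the Gst and GstRtp 1.0 typelibs.
    import gi
    gi.require_version("Gst", "1.0")
    gi.require_version("GstRtp", "1.0")
    from gi.repository import Gst, GstRtp

    ok, rtp_buffer = GstRtp.RTPBuffer.map(info.get_buffer(), Gst.MapFlags.READ)
    if ok:
        try:
            frame_ts = absolute_frame_time(rtp_buffer.get_timestamp())
        finally:
            rtp_buffer.unmap()
        if frame_ts is not None:
            # Packets of the same frame share one RTP timestamp, so values repeat.
            print(f"frame time (Unix seconds): {frame_ts:.6f}")
    return Gst.PadProbeReturn.OK
```

This sketch ignores RTP timestamp wraparound for brevity.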

Sample calculated frame timestamps are shown below.

To sum up, GStreamer gives us the result we want through some simple calculations. However, to be able to do that, we must know which elements should be present in the pipeline and in which order they should be brought together and connected. Solving these puzzles requires mastering the GStreamer elements. By reading the docs or experimenting, you can find out what works for your particular purpose.

References

https://gstreamer.freedesktop.org/documentation/index.html

https://stackoverflow.com/a/62962467

https://github.com/LukasBommes/mv-extractor

https://en.wikipedia.org/wiki/Streaming_media

https://forum.huawei.com/enterprise/en/differences-between-rtsp-rtcp-and-rtp/thread/709293-881